Models within a model: in-context learning pushes boundaries of LLM

Large language models, better known by the acronym LLM, have recently been at the forefront of the AI community's mind. A chatbot capable of communicating convincingly, precisely, and surprisingly "human-like" with a human has considerable potential. LLMs are not a novelty. We are just finding new and new uses as their capabilities improve. That is, quite dramatically in recent months.

Before we explain what exactly in-context learning is, let’s briefly examine how the (deep) neural networks, which LLM is built on, function. We’ll look closer at one of today’s most sophisticated neural networks, OpenAI’s popular GPT, and reveal how advanced algorithms can mimic humans so effectively. And why we don’t actually know exactly how they arrive at their outputs.

Large language models utilize vast amounts of data, often a substantial part of the Internet, filtered in various ways to make sense of the data for algorithmic needs. The LLM is then trained on these datasets to be able to serve a given purpose – as a chatbot, translator or other services – and produce plausible and meaningful outputs. Although the primary use case still concerns languages, LLMs have surpassed this limiting category.

The methods by which it achieves this are numerous. These include, for example, self-supervised pretraining, where the model learns to fill in missing words in sequences, or transfer learning, where we adapt an already completed model to solve a similar task. During LLM learning, so-called regularization ensures that the language model does not rely too heavily on specific training data.

The structure of LLMs is based on so-called feedforward neural networks, where the signal propagates through layers of artificial neurons from input to output. They thus resemble real biological neural networks. For example, LLMs learn that certain words are often used together and that their order in a sentence is not random but has rules. They also discover that some topics, for example, are closely related (often appear together). The more data the neural network gets, the better it can be trained. The process really does resemble learning.

LLMs work so well (usually) because they are optimized for specific tasks. An example would be a language model designed to predict the next word in a sentence (for example, when typing on a virtual mobile phone keyboard). During learning, we can correct the model if it misses a word correctly – making minor adjustments to the weights of the neurons in the network to reduce the prediction error in the output.

The concept itself is quite simple and easy to understand. However, due to the high level of abstraction, it is difficult to look under the hood, i.e. to understand exactly how a neural network arrives at its outputs because there are many neurons and (as with the brains of living organisms) it is not easy to trace how any given neuron contributes to the outcome precisely.

Surprising abilities of large language models

A very interesting situation arises when we look at some of the surprising abilities of these modern neural networks. Researchers have recently noticed a curious phenomenon: so-called in-context learning. It is a phenomenon that experts do not yet fully understand – the LLM can be effectively applied to perform a new surprise task without being trained to do so (i.e., to change the model’s weights directly; see box 2). The LLM can adjust its behaviour during so-called model inference, where we only give the model inputs and compute the output by forward propagating the signal through the layers of neurons.

It is part of so-called few-shot learning, a machine learning method that teaches a model to recognize new classes (e.g., objects) from only a small amount of training data. As a result, modern models can handle a much larger number of problems.

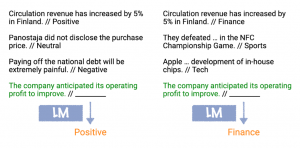

In this case, we give the LLM an input sentence with the expected answer at the end. It is fascinating that it can deduce the relationships and respond with the correct output.

Source: How does in-context learning work? A framework for understanding the differences from traditional supervised learning, The Stanford AI Lab Blog

As another example, consider the popular Midjourney image generator. It was trained on a huge pile of images from all over the Internet, which it then learned from; at first it was given the task of painting simple things and gradually worked its way up to much more sophisticated ones.

The workings of the process within the neural network are as follows. We find a linear function that can paint a particular thing, for example, an arc, and it then uses that to paint a leaf and so on. This function did not exist before the model was trained – the model itself created it.

Then when you want something else after you have pre-trained the model in this way, it makes it easier for it. Suddenly it doesn’t need a vast number of input examples, but it can cope with a few. For instance, if given the task of drawing a human face, it will use an existing linear model, but instead of creating a leaf, it will create a piece of an ear.

The algorithm had to change to adapt to the new task to do this. That happens somewhere in that middle part of the abstraction, the part we can’t see, that connects the linear functions into a usable output, in this case, the generated image.

When you want something else after the pre-trained model, it makes it easier for it. Suddenly it doesn’t need a vast number of input examples, it can cope with a few.

The algorithm had to change, and that happens somewhere in that middle part of the abstraction, the part we can’t see.

A machine learning model would have to be retrained with new data by default. It is a fundamental principle of algorithms and models work that way by default. As the model is trained, it updates existing parameters as it processes new information. However, with in-context learning, there is no predetermined change in parameters. Thus, the model – at first glance – learns the new task without changing its weights.

Research by MIT, Google Research, and Stanford University scientists shows that these massive neural networks deep down contain smaller, simpler linear models that the model acquired during its initial training. The language model then uses a simple algorithm to train this smaller, linear model to perform the task by using information already in the large model itself.

It is, actually, a model within a model.

Smarter models for less money

Let’s take GPT as an example. The model has hundreds of billions of parameters and was trained on a huge amount of text from the Internet. So if someone presents a new task to the model, it is very likely that the model will be able to respond appropriately. In fact, it has very likely already encountered something similar during training. The model does not learn to respond to new tasks in isolation but repeats patterns it has already seen during its training. In the output, it uses a previously trained smaller model hidden inside the overall model.

But why have researchers yet to learn exactly how LLMs work? Of course, they know in a cursory way, but what happens in neural networks between input and output is partly hidden from the experts. The different layers of interconnected neurons simply “work” somehow – the level of abstraction is already very high indeed. It is not magic, but it is a fascinating phenomenon confirming the advanced nature of artificial neural networks; it is a surprisingly intelligent process we have yet to understand in depth. It makes neural networks and large language models all the more interesting.

In-context learning is potentially one of the most important functions of neural networks. If understood thoroughly, it could be applied so that models do not need to be retrained for new tasks, which is otherwise quite expensive.

The time is rapidly approaching when AI will become even more prominent in everyday human life. Our knowledge, understanding, and, likely, the nature of work and productivity will change. General artificial intelligence, a concept that has fascinated and terrified science fiction authors for generations, is steadily approaching. The issue is time and the limits humans set for themselves: The better we understand AI, the safer, better and more sustainably we can use the latest technology.

Next up from prg.ai

Typical Prague AI firm is young, self-sufficient, and export oriented, shows our new comprehensive study

130 companies, 11 interviews, 9 business topics. Explore all that and more in the unique study authored by prg.ai, which contains an overview of last year's most notable events on the local AI scene or articles on the future of AI or gender equality in research.

prg.ai newsletter #41

The first spring edition of our newsletter! Get the latest prg.ai updates, exciting news from the Prague AI scene, a curated list of interesting events, open positions, and much more. Stay in the loop!

prg.ai newsletter #40

The fortieth milestone issue of the prg․ai newsletter is packed with news and intriguing facts not only from the Prague AI scene. Keep reading so you don't miss out on anything!

prg.ai newsletter #39

What did the first month of 2024 bring, and what can you look forward to in February? Find out in the next prg.ai newsletter. Check out what's new on the artificial intelligence scene (not only) in Prague.