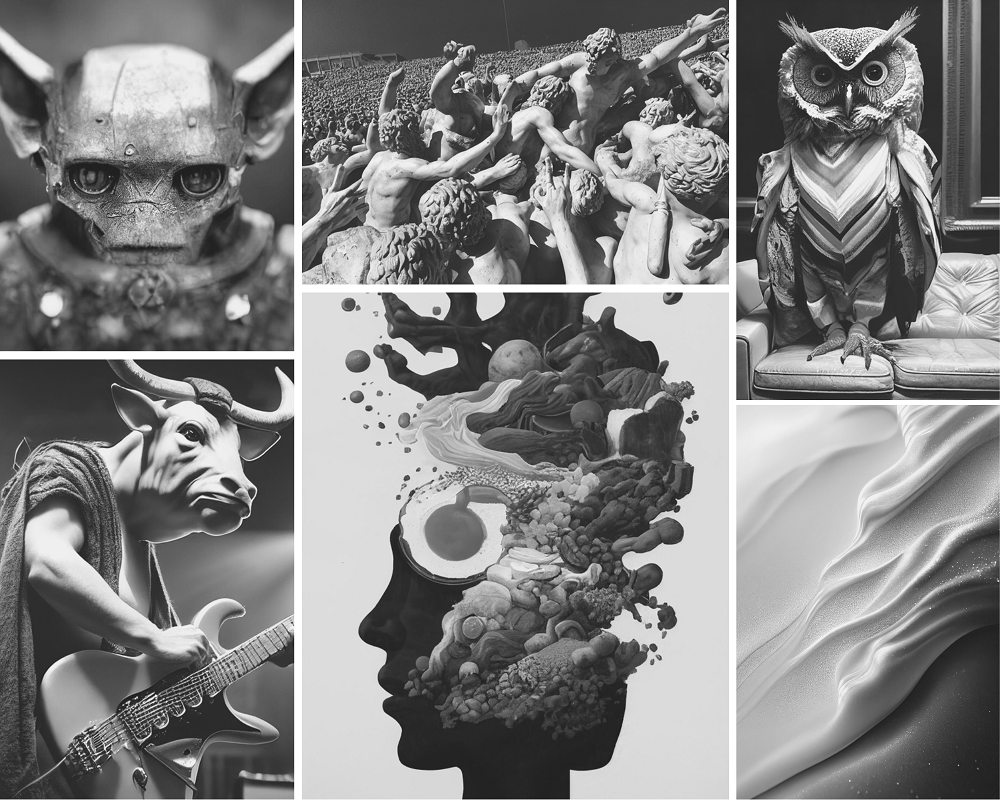

Dalí or DALL-E? When Artificial Intelligence Creates Art

What is art? Even the philosophers of ancient Greece could not agree on the answer. Artificial intelligence, of course, never entered their heated debates, and neither did tools like DALL-E 2 or Midjourney, which now push the boundaries of human creativity.

Until recently, art and related creative fields were believed to be an exclusively human affair. Thanks to advances in artificial intelligence, this is changing rapidly. Humans still have a large influence: when AI algorithms generate works of art or literature, they do so based on input data that humans selected and created. But what happens deep inside the algorithms is often not fully understood, even by today’s leading experts. This is also why algorithms create art that may look familiar, that is, in a recognizable style, yet is nevertheless quite unique.

Product of an artificial mind

A few years ago, the auction house Christie’s sold the first artwork created by artificial intelligence to be auctioned by a major auction house: a blurry portrait called Edmond de Belamy. The final price? 432,500 dollars, equivalent to just over 10 million Czech crowns. Computer art is thus no longer just an innovative experiment but a real business that attracts art collectors.

Each generator’s algorithms work differently, and the exact process behind such AI works is often unknown. In the case of the Edmond de Belamy portrait, for example, a group of Parisian artists took thousands of real portraits from art history and used them as a dataset on which to train their algorithm. The algorithm then interpreted this data partly in its own way to create a unique portrait.

That is the fascinating part: once the data and instructions are fed into the algorithm, it continues learning on its own. This is why machine learning, and deep learning in particular, is such a revolutionary technique: the result depends not only on what input data the algorithm receives but also on exactly how it processes that data.

The drive to use AI in the arts is far from new. One of the first projects of its kind, the AARON system developed by Harold Cohen, dates back to the late 1960s. But AARON was a “classical” algorithm: a program that was given data and instructions, which it then followed exactly.

Only today can developers write algorithms that learn a particular aesthetic by analyzing thousands of input images and then generate new images in the style they have learned. That is why Midjourney, DALL-E 2 and other programs produce results that vary widely yet share a recognizable style: the algorithms have derived an overall aesthetic from the input data, along with sophisticated rules that they then follow to generate images.

Well, mostly. Sometimes the results fall short because of the limits of current machine learning methods. These methods will keep improving, however; after all, learning and improving is what they are built for.
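To make the contrast between a rule-following program and a learning one concrete, here is a deliberately tiny sketch. It reduces an image to a single hypothetical feature, brightness on a 0 to 255 scale, and reduces “learning a style” to estimating the mean and spread of that feature from example paintings and then sampling new values from a normal distribution. The function names, the fixed rule and the training values are all illustrative assumptions, not Cohen’s AARON or any real generator; systems such as DALL-E 2 or Midjourney learn millions of parameters from raw pixels, not two numbers.

```python
import random
import statistics

# Rule-based generator in the spirit of early systems such as AARON.
# The rule is a made-up illustration (not Cohen's actual program):
# the output is fully determined by the instructions the programmer wrote.
def rule_based_brightness(n):
    return [(i * 40) % 256 for i in range(n)]

# Learning step: estimate a "style" from example data. Here the style is
# reduced to the mean and spread of one feature (image brightness, 0-255).
def learn_style(training_values):
    return statistics.mean(training_values), statistics.stdev(training_values)

# Generation step: sample new values that follow the learned style.
def generate_in_style(style, n, seed=0):
    mean, spread = style
    rng = random.Random(seed)
    samples = (rng.gauss(mean, spread) for _ in range(n))
    # Clamp to the valid 0-255 range and round to whole brightness levels.
    return [min(255, max(0, round(s))) for s in samples]

# Hypothetical brightness values of "training" paintings.
training = [40, 55, 62, 48, 70, 51, 45, 66]
style = learn_style(training)
new_values = generate_in_style(style, 5, seed=42)

print(rule_based_brightness(4))  # [0, 40, 80, 120]
print(style[0])                  # 54.625
print(new_values)
```

The rule-based function returns the same sequence no matter what art it has “seen”, while the learned generator’s output shifts whenever the training examples change, which is the essential difference the paragraph describes.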

$$\min_G \max_D \; \mathbb{E}_{x}\left[\log D(x)\right] + \mathbb{E}_{z}\left[\log\left(1 - D(G(z))\right)\right]$$

Portrait of Edmond de Belamy, from La Famille de Belamy (2018). Courtesy of Christie’s Images Ltd.

Deep learning, neural networks and “creative” algorithms

Most of the older art generators (which, realistically, are only a few years old) and a good portion of the newer ones use what are known as Generative Adversarial Networks, or GANs. GANs first appeared only in 2014 and are considered to be among the most advanced machine learning models. They also pose a real threat in the area of disinformation, because they make it easy to create so-called deepfakes.

Let’s see how GAN-based algorithms work by example. Suppose an artist-programmer feeds thousands of landscape paintings by different artists and in different styles into the algorithm during the training phase. Inside a GAN, two networks compete: a generator produces candidate images, and a discriminator tries to tell them apart from the real training paintings. Each improves by trying to beat the other, and once training is finished, the generator produces a wide range of output images.

The programmer then helps choose which outputs are most appropriate and which to use further. The programmer’s influence is very strong here; the process essentially resembles a teacher guiding a student.
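The minimax objective behind GANs, min over G of max over D of E_x[log D(x)] + E_z[log(1 − D(G(z)))], can be explored numerically. The sketch below is an illustration under simplifying assumptions: instead of images it uses two toy discrete distributions over hypothetical outcomes (“sunset”, “forest”), and instead of training a discriminator it uses the known closed form of the best discriminator for a fixed generator, D*(x) = p_data(x) / (p_data(x) + p_g(x)), from the original GAN paper. The function names are hypothetical. When the generator matches the data exactly, the discriminator can do no better than guess 1/2, and the objective reaches its minimum of −log 4.

```python
import math

def optimal_discriminator(p_data, p_gen):
    # Best discriminator for a fixed generator:
    # D*(x) = p_data(x) / (p_data(x) + p_gen(x)) for every outcome x.
    outcomes = set(p_data) | set(p_gen)
    return {
        x: p_data.get(x, 0.0) / (p_data.get(x, 0.0) + p_gen.get(x, 0.0))
        for x in outcomes
        if p_data.get(x, 0.0) + p_gen.get(x, 0.0) > 0
    }

def gan_value(p_data, p_gen, d):
    # V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))], with the second
    # expectation written over the outputs x = G(z) the generator produces.
    value = 0.0
    for x, p in p_data.items():
        if p > 0:
            value += p * math.log(d[x])
    for x, q in p_gen.items():
        if q > 0:
            value += q * math.log(1.0 - d[x])
    return value

same = {"sunset": 0.5, "forest": 0.5}    # generator matches the data
skewed = {"sunset": 0.9, "forest": 0.1}  # generator overproduces sunsets

print(round(gan_value(same, same, optimal_discriminator(same, same)), 4))  # -1.3863
print(gan_value(same, skewed, optimal_discriminator(same, skewed)) > -math.log(4))  # True
```

Any mismatch between the generated and real distributions lets the discriminator score above −log 4, which is exactly the signal the generator is trained to eliminate.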

Newer programs are often built on VQGAN+CLIP, a technique used, for example, by NightCafe Creator, one of the most popular applications for AI art generation. In a nutshell, it combines two different machine learning models that work together to create an image from a text input. VQGAN is a neural network based on the GAN model, while CLIP is another neural network that takes over the role of the human here: it judges on its own how well the generated image matches the text input. This removes another layer of human input, and art-making is indeed gradually becoming automated.
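VQGAN+CLIP pipelines typically work by optimization: the generator’s latent input is adjusted step by step so that CLIP’s embedding of the resulting image moves closer to CLIP’s embedding of the text prompt, measured by cosine similarity. The sketch below reproduces only that feedback loop. The two tiny matrices standing in for VQGAN’s decoder and CLIP’s image encoder, the three-dimensional “embeddings”, and the names `GENERATOR`, `IMAGE_ENCODER`, `clip_score` and `optimize_latent` are all hypothetical; the real models are large neural networks whose gradients are computed by a deep learning framework rather than by hand.

```python
import math

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def transpose_matvec(m, v):
    # Multiply by the transpose of m without building it explicitly.
    return [sum(m[i][j] * v[i] for i in range(len(m))) for j in range(len(m[0]))]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def cosine(a, b):
    return dot(a, b) / (norm(a) * norm(b))

# Hypothetical stand-ins for the two networks (latent -> image -> embedding).
GENERATOR = [[1.0, 0.2, 0.0], [0.0, 1.0, 0.5], [0.3, 0.0, 1.0], [0.1, 0.1, 0.1]]
IMAGE_ENCODER = [[1.0, 0.0, 0.0, 0.2], [0.0, 1.0, 0.0, 0.2], [0.0, 0.0, 1.0, 0.2]]

def clip_score(z, text_emb):
    # "CLIP" judges how well the generated image matches the text.
    image = matvec(GENERATOR, z)
    return cosine(matvec(IMAGE_ENCODER, image), text_emb)

def optimize_latent(z, text_emb, steps=200, lr=0.5):
    z = list(z)
    for _ in range(steps):
        emb = matvec(IMAGE_ENCODER, matvec(GENERATOR, z))
        n_e, n_t = norm(emb), norm(text_emb)
        c = dot(emb, text_emb) / (n_e * n_t)
        # Gradient of cosine similarity with respect to the image embedding.
        grad_emb = [t / (n_e * n_t) - c * e / (n_e ** 2) for e, t in zip(emb, text_emb)]
        # Chain rule back through the encoder and the generator to the latent.
        grad_z = transpose_matvec(GENERATOR, transpose_matvec(IMAGE_ENCODER, grad_emb))
        # Gradient ascent: nudge the latent so the image matches the text better.
        z = [zi + lr * g for zi, g in zip(z, grad_z)]
    return z

text_embedding = [0.0, 1.0, 0.0]  # hypothetical embedding of a text prompt
start = [1.0, 0.0, 0.0]
best = optimize_latent(start, text_embedding)
print(round(clip_score(start, text_embedding), 3), round(clip_score(best, text_embedding), 3))
```

No human picks the winner in this loop: the similarity score alone decides which direction the image moves, which is the layer of human judgment the paragraph says CLIP replaces.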

What can each AI art generator do?

NightCafe

With NightCafe, you first enter a text prompt, then select the desired style, and finally click the Create button. The image is ready within moments. In our experiment, we asked the AI to show us what a train station in an enchanted forest looks like, all in a steampunk aesthetic.

The images created with NightCafe are simple but appealing, and the program is partially free to use. It runs on a system of credits, which can be earned in a variety of ways. It is one of the most accessible modern image generators.

DALL-E 2

DALL-E 2 is famous today, but access to it remains exclusive. Getting in is hard: you have to join a waiting list, and it is unclear how long you will wait before you can use the app. The author of this article has been on the waiting list for over a month.

DALL-E 2 is truly impressive and can modify the resulting art to suit the user’s requirements. It can also generate a fascinating array of variations on the original input image, often in truly unusual ways.

Midjourney

Midjourney works much like DALL-E 2, but unlike DALL-E 2 it offers a partially free version. Users who want to use Midjourney regularly have to pay.

Creating art is not quite as easy, however: you need an account on the chat service Discord and an understanding of basic bot commands. But the result is worth it. Midjourney produces consistently beautiful, often quite abstract results.

Wombo Dream

The most accessible of all the services we tested, Wombo Dream is extremely simple to use and can achieve very good results. Images from the Dream app are currently all over social networks like TikTok and Twitter. This is not the first time Wombo’s parent company has gained popularity there: the Canadian company specializes in creating fun deepfakes from selfies.

The problem with the service, however, lies in its unusual terms and conditions. We recommend caution here, especially if you intend to use the AI-generated art for commercial purposes or anywhere public.

Stable Diffusion

The open-source project Stable Diffusion can generate absolutely fantastic results, but for now it is mainly suited to technically skilled users. Running it on your own computer is not easy and requires powerful hardware.

But once the first user-friendly version is released, we can expect Stable Diffusion to become one of the most popular services of its kind. It learned to generate images from the massive LAION-5B dataset, which contains more than 5 billion image-text pairs collected from the public internet. Common sources include Pinterest, DeviantArt and various image libraries such as Getty Images.
