Marek Rosa: In search of general artificial intelligence

Questions surrounding general artificial intelligence divide the professional public. Can an autonomous system ever reach human versatility? Marek Rosa believes that it is not only possible, but also desirable. To develop and study general artificial intelligence, he founded GoodAI and leads an international research fund sponsoring researchers around the world.

At GoodAI, you focus on the development of artificial general intelligence. What does that term mean?

The usual contrast is between so-called ‘narrow AI’ and ‘general AI’. Narrow AI is a system which utilises a learning algorithm and can learn to complete a specific task independently of its programmer. As a developer of narrow AI, you purposefully train your system for this very specific task and know how to measure the AI’s performance in this particular context.

In the case of general AI, we are assuming that we don’t know what the task will be. We aim to create an artificial intelligence (AI) that can adapt to any kind of task it may come across in the future, or invent its own task and prepare itself for future tasks that may somehow resemble tasks from its history.

So general AI is somehow similar to the functioning of the human brain.

We hope that one day, we’ll just explain to the AI what we want, and the AI will start solving the task independently. General AI is assumed to have cognitive computing capacities well beyond those of today’s narrow AI systems. It should be able to solve tasks which are impossible for today’s algorithms, train itself independently, and provide tremendous cost benefits in terms of labour.

What do you see as the most important practical and commercial uses of general AI?

In my opinion, general AI can be used for almost anything. And that is why it interests me so much: its vast potential applicability. That’s the idea. It could work in areas as diverse as medicine or entertainment, cover everything from resource mining to scientific analysis, or even politics. With general AI, everything which could and should be automated can be managed by one system.

Are there many companies focusing on the development of general AI?

The overwhelming majority currently focuses on narrow AI. Only a few companies or research groups, as far as I know, focus on general AI. Though I should say things are changing slowly but surely: in the past two or three years, a growing number of people have been working on meta-learning, continual learning, and other problems related to general AI. Many of these groups are not claiming to work on general AI outright, but are working on projects which aim to improve various learning capabilities of AI. That type of work could be considered a necessary step to developing a general AI model as we envision it.

How does the development process of general AI vary from working on narrow AI?

With narrow AI, you usually have a very clear objective as to what the AI should achieve. If it is meant to recognise images, it should eventually be able to sort out pictures into categories and classes, or identify certain objects in them. As for general AI, it’s usually very hard to define the final objective and function. That’s one problem.

Another is that many people do not believe in the possibility of general AI. They doubt that it is possible to develop AI with human-level capabilities or beyond, especially considering the broad generalisability that would be required. They argue in favour of narrow AI: they claim that if we can train a specific system for each task, there isn’t a real need for a complex multi-purpose solution. The potential of a complex multi-purpose solution appears out of reach because current approaches privilege monolithic systems. This is where GoodAI diverges from convention. We are focused on developing a modular architecture that embodies the cumulative aspect of culture to learn and adapt.

So what’s your approach?

In the case of GoodAI, I decided that I don’t want to build an organisation where one team pursues a specific narrow AI problem, another team focuses on a separate narrow AI project, and maybe eventually the organisation will reach AGI once it consolidates all its research work. I wanted to pursue general AI right from the start – even if that means we’ll be completely stuck for the first five years, for example. I wanted to avoid wasting time or getting distracted by solving problems that are not relevant to our goal.

What do you think is going to be your final product?

We are currently in our fundamental research stage: we’re still at the beginning, considering the fundamental science behind general AI. The next stage would result in the formulation of a hypothesis and development of some early technology. You have to test those initial assumptions, of course, usually by applying the technology to certain business cases.

We have some visions, some ideas, even some direction we see as worthy of pursuit. But so far, we cannot prove that we are correct in our vision. All of that work is still in front of us. Considering this, it’s good to ask about the final product. I see the future of our company in a couple of stages.

In the later stages, I believe we will have a general AI learning agent that can figure out how to solve a range of different tasks independently. A system that is able to learn how to learn. Ideally, you will be able to explain your problem to the AGI agent without having to give it a complex loss function or feed it a crazy amount of data. The system will find the data it needs for itself, learn how best to approach the issue, and solve the task.

What achievements are you already proud of at GoodAI?

We have what we call the Badger architecture, and a framework for how to think about general AI. I think it’s a good one, even though it hasn’t yielded practical results yet. But it definitely helps our team as well as external researchers to have a common language that we can use to talk about general AI, to describe what is happening in the inner and outer loop, or to discuss the difference between learning and inference.

The next step is to ask better questions, form better hypotheses, and carry out better experiments. As of now, we have only carried out some smaller, intermediate experiments with agents made up of multiple links which can form a whole together, communicate, and form a certain emergent motion or movement.

Of course, this isn’t developing general AI yet. But it’s helping us understand the communication, learning, and coordination among agents. The most important thing is to be able to show that the agents can actually learn any kind of task in an open-ended manner. This is what we are aiming for.

You mentioned the so-called Badger architecture. If I understand correctly, it’s a different approach to the learning procedure of AI, right?

Yes. In traditional AI approaches, you usually have a learning algorithm, a dataset, and a modifiable neural network. After you’re done training the neural network, it can perform a specified task and generate the correct output. Within our Badger architecture, the basics are still there, but the approach is slightly different.

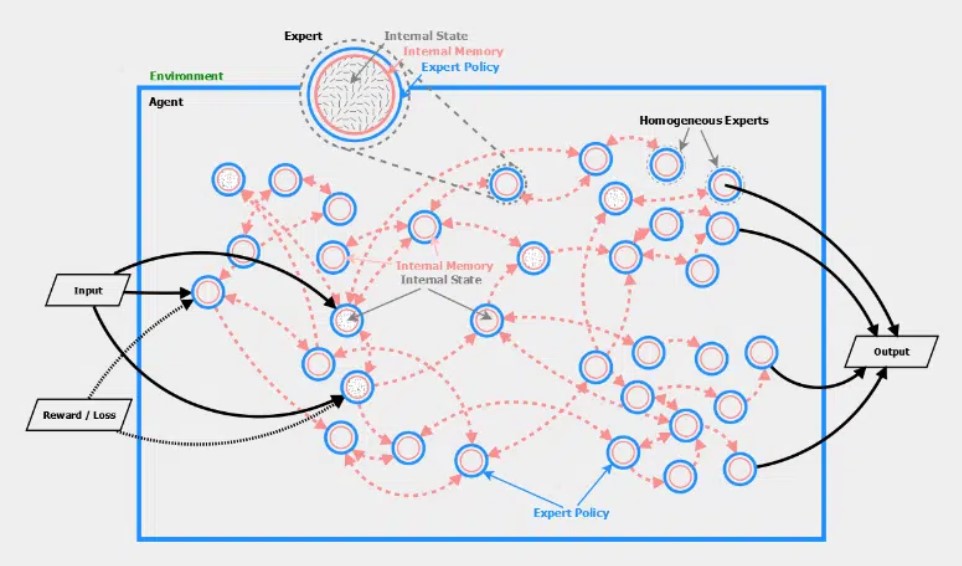

In the context of Badger, you have an agent which is a single brain made from many experts. And all these experts are also subagents who have the same policy, which means that they have the same neural network with the same pathways, but different internal states. It is helpful to think about it in terms of neuroscience.

All experts share the same DNA, they are based on the same thing, but each has a different internal state. For example, one is currently activated, another is going to be activated, another has many neurotransmitters of a certain type, while another has others. Each neuron is in a different state in terms of activation, and they also differ in terms of how they are connected to other neurons. But their policy – their internal functioning – is more or less the same everywhere.

What we do in the Badger architecture is initialise a brain with many of these experts – or neurons – which are the same except that they have different states. And then there is the inner loop and outer loop training procedure. An inner loop is something like the lifetime of the agent. During the inner loop we don’t change the expert policy, that is, the neural network inside the expert. We keep it fixed. And then there is the outer loop, which is something like evolution – there you can actually change the basic shared DNA. So only in the outer loop are we changing the expert policy, the neural network.
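The split between the two loops can be made concrete with a small toy. The sketch below is not GoodAI's implementation: the three-weight `policy`, the mean-state message, the copy-the-observation task, and the random hill-climbing outer loop are all assumptions chosen only to illustrate the idea. What it does mirror from the description is that every expert runs the same policy, experts differ only in their internal state, the inner loop changes states while the policy stays fixed, and only the outer loop changes the shared policy.

```python
import math
import random


def expert_step(policy, state, message, observation):
    """One expert update. Every expert uses the same shared policy;
    only the internal state passed in differs between experts."""
    w_state, w_msg, w_obs = policy
    return math.tanh(w_state * state + w_msg * message + w_obs * observation)


def inner_loop(policy, n_experts, observation, steps, rng):
    """Agent 'lifetime': the policy is fixed, only internal states change."""
    states = [rng.uniform(-1.0, 1.0) for _ in range(n_experts)]
    for _ in range(steps):
        # Hypothetical communication channel: experts share their mean state.
        message = sum(states) / n_experts
        states = [expert_step(policy, s, message, observation) for s in states]
    return sum(states) / n_experts  # the agent's output


def lifetime_loss(policy, n_experts, seed):
    """Toy task (an assumption): the agent's output should equal the observation."""
    rng = random.Random(seed)
    targets = [-0.5, 0.0, 0.5]
    errors = [(inner_loop(policy, n_experts, t, 10, rng) - t) ** 2 for t in targets]
    return sum(errors) / len(targets)


def outer_loop(n_experts=8, generations=200, seed=0):
    """'Evolution': the only place where the shared policy is modified.
    Uses simple random hill climbing as a stand-in for a real optimiser."""
    rng = random.Random(seed)
    best = (0.0, 0.0, 0.0)
    best_loss = lifetime_loss(best, n_experts, seed)
    for _ in range(generations):
        candidate = tuple(w + rng.gauss(0.0, 0.3) for w in best)
        loss = lifetime_loss(candidate, n_experts, seed)
        if loss < best_loss:  # keep a mutation only if the lifetime went better
            best, best_loss = candidate, loss
    return best, best_loss
```

Calling `outer_loop()` returns the best shared policy found and its loss. Because `inner_loop` receives the policy as an immutable tuple, nothing that happens during a lifetime can alter it, which is the property the interview describes.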

How can this change be useful?

We want these experts to be able to talk to each other and change their internal states. We don’t want the learning to be the result of changing the pathways, we want the change to happen within the agents themselves. Plus, there are also many other advantages. For example, we want the inner loop to be able to solve tasks that the outer loop cannot solve or cannot solve efficiently. When traditional AI scientists are talking about meta-learning, they are referring to fixed learning algorithms that are trying to solve a task. In our case, we want to first learn the learning algorithm and then use it to solve any kind of task.

How was this new approach received by the AI community?

I would say it was received well, but hasn’t caused a revolution. We haven’t received any criticism that would say that it’s a definitional approach, that it won’t work, or that we’ve left something important out. I would say the harshest criticism we’ve gotten so far is that we haven’t yet proven that our approach is more efficient than other alternatives.

One way we can show that our approach has important benefits over other algorithms is that if you increase the number of experts in this agent, it should lead to more improvements in the capabilities of the agents. Generally, people in the AI community have been interested in our unusual approach, but they also want to see some data demonstrating that it’s better in the ways it needs to be. We have some experiments planned through which we can show this.

In 2020, you launched the GoodAI grant programme. Can you talk about the grants you have dispensed so far?

The main idea is that we want to collaborate with other researchers on questions and projects related to Badger. We cannot realistically hire everybody to be part of GoodAI. Researchers are committed to their academic careers, or they are engaged in other long-term projects. We thought that grants would be the easiest option for us as well as them. That is mainly why we launched it, together with our workshop that we hold each summer.

Not all grant submissions are Badger related; we’ve had to reject the ones which stray from our research focus. Recent applications look very promising.

You established GoodAI in Prague in 2014. Why did you choose Prague instead of Western European cities, where local AI communities may be more advanced?

I didn’t even consider doing it in another country. I started GoodAI eight years ago and back then, the difference between AI communities wasn’t that pronounced. It was a different situation. And I would have even said that there was an AI winter — or autumn — coming. So I thought, all I need is talented people to work with, a good idea to work on, a solid road map, and a comprehensive framework. And we can continue working no matter what happens. If we need people from different countries, we can always try to get them to Prague, or maybe establish a branch elsewhere. Actually, I am now considering opening a branch in the United States or Canada.

Are you thinking about this because you don’t have access to top talent here in Prague?

I wouldn’t say that. We are looking for people who already have experience in very specific subdomains of AI tailored to our Badger framework. There aren’t many of them in Prague, though there are some in other countries. We try to approach them through the grant programme, for example. Even if we open a branch in, let’s say, Montreal, it will be challenging to reach those few expert researchers since they are usually already well-established in their careers. In other words, it’s not that we want just anybody who is good at AI. We are looking for a very specific research profile.

“Overall, I would like to see scientists in this country be more willing to take risks and take on fundamental research.”

On that note, what do you think about Czech education institutions and academic research institutions in terms of AI?

I think the situation is good — but, of course, anything can be improved. Where I personally would like to push more is to get more attention on fundamental research and less on applied research projects. I would really like to see more scientists who are doing fundamental research in AI and who are also more institutionally risk-tolerant.

Overall, I would like people in this country to be more willing to take risks and do fundamental research. That is how one can do something extraordinary and unique. It should be one of the important metrics for choosing new projects: not whether I like a project or whether it can make me money, but whether other people are not doing it. With such a metric, the research gets interesting.

Can there be general AI without narrow AI, and vice versa?

Actually, I think narrow AI is very useful for general AI. In some ways I think general AI is just narrow AI where the task is how to improve the learning algorithms. When developing narrow AI, you are focusing on some practical task, like self-driving cars, image recognition or sound recognition, for example.

With general AI, you often use the same tools directed toward developing a learning algorithm to be used on itself or for some other tasks. Therefore, I think the methods and approaches associated with narrow AI are extremely useful for us. Researchers working on narrow AI are building the tools, the hardware, and the science, which is definitely useful for us.

What are you working on now and what’s on the horizon for GoodAI in the near future?

We’ve been working very hard on existing projects like the VeriDream project, which is an EU-funded consortium building AI-aided solutions in robotics. Together with Sorbonne University, we’ve been working on Quality Diversity algorithms that will improve the movement of robotic legs, with applications for video games and more.

Then there’s the AI Game project. The vision has been to make a game whose main mechanics are about training and teaching game agents, so that they eventually act autonomously in the game environment. Recently, we switched our approach to working with pre-trained language models.

In this scenario, the agent understands what we will write to him and can somehow translate it into actions that are possible in his game world. If I write to him to pick up an axe and kill, he’ll do it when he’s in the mood. If he’s not, I will have to convince him. I can try to please him and flatter him somehow, or I can blackmail him, telling him that if he doesn’t kill, I’ll kill his wife.

I’ve given some extreme examples, but they’re funny ones. So it’s starting to look like something, but I think we still have more revolutionary steps ahead of us. We’re trying to find the balance between making a game where you train AI and having it be fun.

And lastly, we are exploring how to put our knowledge and research in collective and multi-agent systems to practice. We’ve started looking into drone technology, since we believe that this area is ripe for rapid innovation and prototyping. We’re interested in applications where you need better situational awareness and information-gathering, with groups of drones acting like carriers of different kinds of sensors, complementing one another. At this stage we’re busy identifying scenarios where we can add value with such technology, and moreover some of us at GoodAI are passionate about robotics and drones, so it’s just a natural step for us.
